Codemod AI: An iterative system for auto-generating codemods

Codemods are essential for large-scale codebase modernization, but building them can be burdensome and requires deep expertise in both the programming language and the codemod engine’s API. Although AI has shown some promise in automating software engineering tasks, achieving the accuracy that codemods require is still challenging. At Codemod, we address this challenge with an iterative process that significantly improves the quality of LLM-generated codemods. The key idea comes from how developers work: they improve a codemod iteratively until it produces the desired outcome. Codemod AI embraces the same philosophy.

LLMs fall short in generating codemods.

Large language models (LLMs) such as GPT-4o have made advances in code generation, and codemod generation is one subset of that task. To generate a codemod, a user provides a pair of “before” and “after” code examples and, optionally, a description of the transformation logic. The LLM analyzes this input, identifies the required transformations, and attempts to generate a codemod that replicates the desired change.
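As a rough illustration of that input, the sketch below models a generation request in TypeScript and assembles it into a prompt. The `CodemodRequest` type, the `buildPrompt` function, and the prompt wording are all hypothetical; they are not Codemod’s actual API or its expert-curated prompt.

```typescript
// Hypothetical shape of a codemod-generation request (illustrative only).
interface CodemodRequest {
  before: string;       // source code before the change
  after: string;        // source code after the change
  description?: string; // optional natural-language description of the logic
  engine: string;       // target codemod engine, e.g. "jscodeshift"
}

// Assemble a plain-text prompt. The wording is a placeholder, not the
// expert-curated prompt used by Codemod.
function buildPrompt(req: CodemodRequest): string {
  const sections = [
    `Write a ${req.engine} codemod that transforms the "before" code into the "after" code.`,
    `Before:\n${req.before}`,
    `After:\n${req.after}`,
  ];
  if (req.description !== undefined) {
    sections.push(`Transformation notes:\n${req.description}`);
  }
  return sections.join("\n\n");
}

// Example: a simple method rename, with no description supplied.
const prompt = buildPrompt({
  before: "const x = foo.bar();",
  after: "const x = foo.baz();",
  engine: "jscodeshift",
});
console.log(prompt);
```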

LLMs can leverage their training on a large number of codebases to 1) analyze the provided “before” and “after” code examples in a chosen programming language (e.g., JavaScript) and 2) generate a codemod for the codemod engine of choice (e.g., jscodeshift). This can potentially automate a significant portion of the codemod creation process.

Despite their strengths, vanilla LLMs often fall short in codemod generation. A significant issue is the LLM’s lack of deep understanding of a codemod engine’s internal mechanisms. This can lead to codemods that suffer from 1) type and syntax errors, which are caught by compilers, 2) execution errors, which are only caught by running the codemod, and 3) functionality errors, which are found by comparing the expected output with the actual output the codemod generates. Without specialized guidance, LLMs may struggle to generate complex transformations that involve nuanced changes to code structure or logic.

To study the extent of these issues, we ran experiments on generating codemods for 170 pairs of “before” and “after” code examples drawn from the Codemod public registry. Even with our expert-curated prompt, vanilla GPT-4o generated jscodeshift codemods that correctly transform the given “before” code into the given “after” code in only 45.29% of the cases. The remaining 54.71% of cases broke down as follows: in 24.71% of all cases, the codemod had type or syntax errors caught by the TypeScript compiler (tsc); in 11.76%, it hit execution errors when run on the “before” code snippet; and in the remaining 18.24%, it was free of compiler and execution errors but did not make the desired transformation.
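The four outcome rates above are percentages of all 170 pairs, and each corresponds to a whole number of pairs. The snippet below back-computes those counts (our reconstruction from the reported percentages, not separately published figures) and checks that the three failure modes add up to the 54.71% of incorrect cases.

```typescript
// Outcome counts for vanilla GPT-4o over the 170 pairs, back-computed from the
// reported percentages (our reconstruction, not published raw counts).
const TOTAL_PAIRS = 170;
const outcomes = {
  correct: 77,
  typeOrSyntaxError: 42, // caught by tsc
  executionError: 20,    // caught by running the codemod
  wrongTransform: 31,    // ran cleanly but produced the wrong output
};

// Percentage of all pairs, rounded to two decimals like the reported figures.
const pct = (n: number): string => ((100 * n) / TOTAL_PAIRS).toFixed(2);

for (const [name, count] of Object.entries(outcomes)) {
  console.log(`${name}: ${count}/${TOTAL_PAIRS} = ${pct(count)}%`);
}

// The three failure modes together account for every incorrect case.
const failures =
  outcomes.typeOrSyntaxError + outcomes.executionError + outcomes.wrongTransform;
console.log(`failures: ${failures}/${TOTAL_PAIRS} = ${pct(failures)}%`);
```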

The most common compiler errors in the generated codemods were missing properties (e.g., Property 'X' does not exist on type 'Y') and incorrect function arguments (e.g., Expected N arguments, but got M). These errors point to a lack of understanding of the codemod engine’s internal workings, even though jscodeshift has been around for several years. Such issues are amplified when the LLM is used with a new codemod engine, or when an established codemod engine changes its structure.
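These two messages correspond to the TypeScript diagnostics TS2339 and TS2554. As a sketch of how such errors can be tallied, the code below parses tsc output, assuming it was produced with `--pretty false` so that each diagnostic sits on one line as `file(line,col): error TSxxxx: message`. The `parseTscOutput` and `countByCode` helpers and the sample output are hypothetical, made up for illustration.

```typescript
// One parsed tsc diagnostic.
interface Diagnostic {
  file: string;
  line: number;
  column: number;
  code: string;    // e.g. "TS2339"
  message: string;
}

// Matches the non-pretty format: file(line,col): error TSxxxx: message
const DIAGNOSTIC_RE = /^(.+?)\((\d+),(\d+)\): error (TS\d+): (.*)$/;

function parseTscOutput(output: string): Diagnostic[] {
  const diagnostics: Diagnostic[] = [];
  for (const raw of output.split("\n")) {
    const m = DIAGNOSTIC_RE.exec(raw.trim());
    if (!m) continue; // skip summaries and any non-diagnostic lines
    diagnostics.push({
      file: m[1],
      line: Number(m[2]),
      column: Number(m[3]),
      code: m[4],
      message: m[5],
    });
  }
  return diagnostics;
}

// Count how often each error code occurs.
function countByCode(diags: Diagnostic[]): Map<string, number> {
  const counts = new Map<string, number>();
  for (const d of diags) counts.set(d.code, (counts.get(d.code) ?? 0) + 1);
  return counts;
}

// Made-up sample output showing the two error kinds named above.
const sample = [
  "codemod.ts(8,22): error TS2339: Property 'foo' does not exist on type 'Collection<any>'.",
  "codemod.ts(14,5): error TS2554: Expected 1 arguments, but got 2.",
  "codemod.ts(20,9): error TS2339: Property 'bar' does not exist on type 'Node'.",
].join("\n");

const diags = parseTscOutput(sample);
const counts = countByCode(diags);
console.log(counts);
```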

We are introducing a new iterative approach to codemod generation.

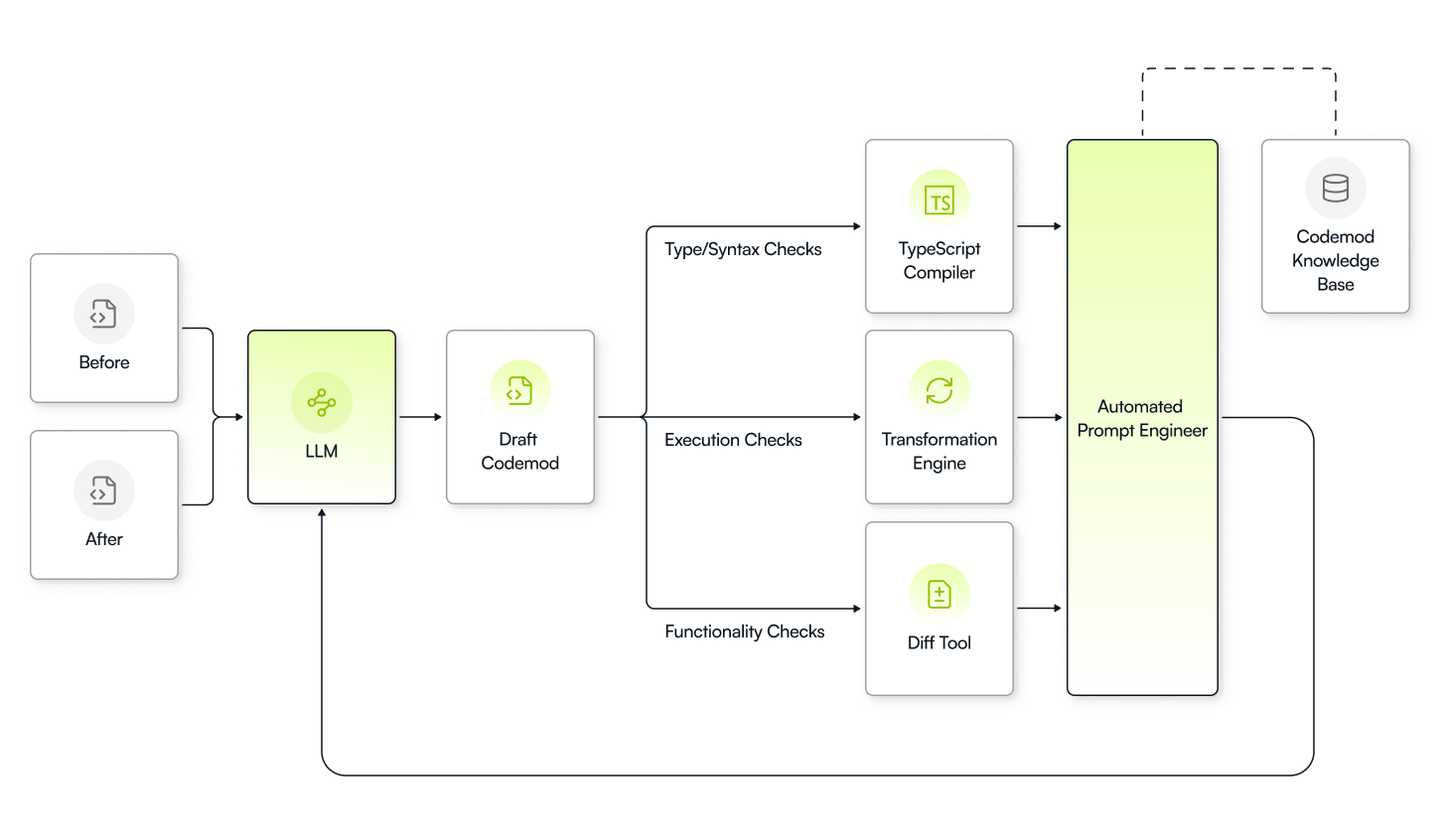

Our iterative AI approach introduces a feedback loop that significantly improves the accuracy of generated codemods. The following diagram shows an overview of the described approach.

Overview of iterative codemod generation.

The user begins by providing a pair of “before” and “after” code examples in Codemod Studio. The LLM is then prompted with a carefully crafted prompt, tailored to codemod generation with the codemod engine of choice (e.g., jscodeshift), and produces what we call a “draft codemod”.

This draft codemod then goes through iterations of analysis. Engineering tools such as the TypeScript compiler, the jscodeshift codemod runner, and a diff calculator examine the generated codemod and its output to detect syntax or type errors, runtime errors, or functionality issues. This feedback is then cross-referenced with a knowledge base tailored to the specific codemod engine in use: a repository of the engine’s available types, properties, and functions, along with common issues, potential solutions, and hints.
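A minimal sketch of this analysis step, assuming each tool’s raw result has already been collected: `classify` orders the checks (compile errors, then runtime errors, then an output diff), and `hintsFor` looks up matching entries in a knowledge base. All names, the naive positional diff, and the knowledge-base entries are hypothetical stand-ins, not Codemod’s implementation or its actual knowledge base.

```typescript
// Hypothetical raw results from the three tools run on a draft codemod.
interface CheckResults {
  compilerDiagnostics: string[]; // tsc messages; empty if it type-checks
  runtimeError?: string;         // error thrown while running the codemod
  actualOutput?: string;         // codemod output on the "before" snippet
}

// The three error classes described in the text, plus success.
type Feedback =
  | { kind: "compile"; details: string }
  | { kind: "runtime"; details: string }
  | { kind: "mismatch"; details: string }
  | { kind: "ok" };

// Naive positional line diff (not a minimal LCS diff); enough to show what differs.
function lineDiff(expected: string, actual: string): string {
  const e = expected.split("\n");
  const a = actual.split("\n");
  const out: string[] = [];
  for (let i = 0; i < Math.max(e.length, a.length); i++) {
    if (e[i] === a[i]) continue;
    if (e[i] !== undefined) out.push(`- ${e[i]}`);
    if (a[i] !== undefined) out.push(`+ ${a[i]}`);
  }
  return out.join("\n");
}

// Checks are ordered: compile errors first, then runtime errors, then output.
function classify(results: CheckResults, expectedAfter: string): Feedback {
  if (results.compilerDiagnostics.length > 0) {
    return { kind: "compile", details: results.compilerDiagnostics.join("\n") };
  }
  if (results.runtimeError !== undefined) {
    return { kind: "runtime", details: results.runtimeError };
  }
  const actual = results.actualOutput ?? "";
  if (actual !== expectedAfter) {
    return { kind: "mismatch", details: lineDiff(expectedAfter, actual) };
  }
  return { kind: "ok" };
}

// Hypothetical knowledge-base entries: message patterns mapped to hints.
const knowledgeBase: { pattern: RegExp; hint: string }[] = [
  {
    pattern: /Property '.+' does not exist on type/,
    hint: "Check the engine's type definitions for the correct property, or narrow the node type first.",
  },
  {
    pattern: /Expected \d+ arguments?, but got \d+/,
    hint: "Look up the engine function's signature before calling it.",
  },
  {
    pattern: /Cannot read properties of undefined/,
    hint: "Guard optional AST fields before accessing them.",
  },
];

// Collect the hints whose pattern matches the feedback details.
function hintsFor(feedback: Feedback): string[] {
  if (feedback.kind === "ok") return [];
  const details = feedback.details;
  return knowledgeBase.filter((e) => e.pattern.test(details)).map((e) => e.hint);
}

const fb = classify(
  { compilerDiagnostics: ["error TS2339: Property 'foo' does not exist on type 'Collection<any>'."] },
  "const b = 1;",
);
console.log(fb.kind, hintsFor(fb));
```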

Armed with the feedback from the tools and insights fetched from the knowledge base, the LLM iteratively revises the codemod. This cycle of generation, analysis, and refinement continues until the system produces a correct codemod or reaches a predetermined iteration threshold.
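The loop itself can be sketched as follows, assuming the LLM call and the tool analysis are supplied as functions. `refineCodemod`, its parameters, and the toy stubs are hypothetical; `maxRefinements` plays the role of the predetermined iteration threshold.

```typescript
// Result of analyzing one codemod attempt.
interface Analysis {
  ok: boolean;      // true when the codemod compiles, runs, and matches "after"
  feedback: string; // tool output plus any knowledge-base hints
}

type Generate = (prompt: string) => string;  // stands in for the LLM call
type Analyze = (codemod: string) => Analysis; // stands in for tsc + runner + diff

interface LoopResult {
  codemod: string;
  ok: boolean;
  refinements: number; // refinement iterations performed after the draft
}

function refineCodemod(
  initialPrompt: string,
  generate: Generate,
  analyze: Analyze,
  maxRefinements: number,
): LoopResult {
  let codemod = generate(initialPrompt); // the draft codemod
  let analysis = analyze(codemod);
  let refinements = 0;
  // Revise until the codemod passes every check or the threshold is reached.
  while (!analysis.ok && refinements < maxRefinements) {
    const prompt =
      `${initialPrompt}\n\nPrevious attempt:\n${codemod}\n\nProblems found:\n${analysis.feedback}`;
    codemod = generate(prompt);
    analysis = analyze(codemod);
    refinements++;
  }
  return { codemod, ok: analysis.ok, refinements };
}

// Toy stubs: the "LLM" gets it right on its third call, and the checker
// passes any codemod whose text no longer contains "TODO".
let calls = 0;
const fakeGenerate: Generate = () => {
  calls++;
  return calls < 3 ? `transform v${calls} TODO` : `transform v${calls}`;
};
const fakeAnalyze: Analyze = (codemod) =>
  codemod.includes("TODO")
    ? { ok: false, feedback: "output differs from expected" }
    : { ok: true, feedback: "" };

const result = refineCodemod("Write a codemod...", fakeGenerate, fakeAnalyze, 3);
console.log(result.ok, result.refinements); // true 2
```

Passing the generator and analyzer in as parameters keeps the loop independent of any particular LLM or codemod engine; with these stubs, the draft fails and the loop succeeds after two refinements, within the limit of three.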

The first version of Codemod AI boosted the success rate of auto-generating codemods from 45% to 75%.

The primary advantage of our iterative AI approach is the improved accuracy of the generated codemods. By incorporating static and dynamic tools and a specialized knowledge base, we guide the LLM toward a codemod that is not only syntactically correct in the target programming language but also syntactically and semantically correct with respect to the codemod engine. This yields a significant improvement over the output of vanilla GPT-4o.

The feedback loop enables targeted refinements. Rather than revising blindly, the LLM receives precise feedback based on deterministic static and dynamic analysis. Additionally, by consulting the knowledge base, the LLM can better handle complex cases that depend on the engine’s specific types, properties, and functions.

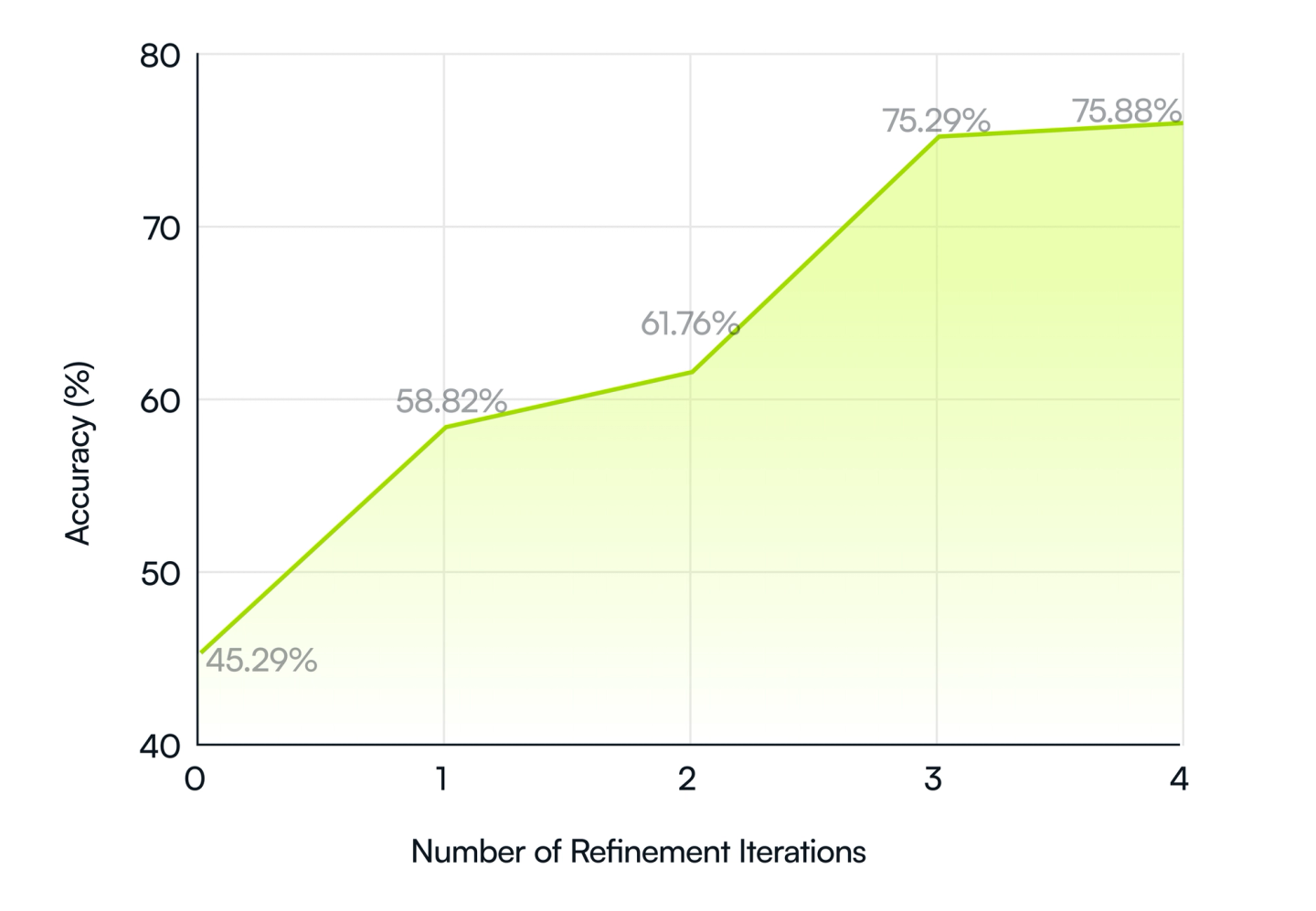

We have collected quantitative results showcasing the advantage of our approach over vanilla GPT-4o. As the chart below shows, not having any refinement iterations (i.e., using vanilla GPT-4o) results in an accuracy of 45.29%. Increasing the number of refinement iterations increases the accuracy. In particular, using one, two, or three refinement iterations results in accuracies of 58.82%, 61.76%, and 75.29%, respectively. We observed that using four iterations has an insignificant effect on the accuracy (+0.59%).

The pie chart below compares Codemod's new AI system with vanilla GPT-4o and expert-curated prompts.

Accuracy of auto-generated codemods.
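Assuming every accuracy figure in this comparison is measured over the same 170 pairs, each one maps to a whole number of correctly generated codemods. The snippet below is our back-computation from the reported percentages, not separately published counts; it also shows that the +0.59-point gain from a fourth iteration amounts to a single additional pair.

```typescript
// Reported accuracy (%) by number of refinement iterations; 0 = vanilla GPT-4o.
const TOTAL_PAIRS = 170;
const reported: Array<[iterations: number, accuracyPct: number]> = [
  [0, 45.29],
  [1, 58.82],
  [2, 61.76],
  [3, 75.29],
];

// Back-compute the implied number of correct codemods and confirm that the
// count reproduces the reported percentage when rounded to two decimals.
const rows = reported.map(([iterations, accuracyPct]) => {
  const correct = Math.round((accuracyPct / 100) * TOTAL_PAIRS);
  const roundTrip = ((100 * correct) / TOTAL_PAIRS).toFixed(2);
  return { iterations, correct, roundTrip, matches: roundTrip === accuracyPct.toFixed(2) };
});

for (const r of rows) {
  console.log(`${r.iterations} refinement(s): ${r.correct}/${TOTAL_PAIRS} = ${r.roundTrip}%`);
}

// One extra correct pair out of 170, expressed in percentage points.
const onePairPct = ((100 * 1) / TOTAL_PAIRS).toFixed(2);
console.log(`one pair = ${onePairPct} percentage points`);
```

Read this way, going from zero to three refinement iterations raises the number of correct codemods from 77 to 128, i.e., 51 more pairs out of 170.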

What’s next for Codemod AI

For our current evaluation, we considered only one pair of examples per codemod, which may limit how well the auto-generated codemods generalize beyond that pair. In the future, we aim to support codemods with multiple before/after examples.

We designed Codemod AI so that it can easily be used with any codemod engine, and we plan to add support for more engines in the future.

Key Takeaways

The limitations of vanilla LLMs for codemod generation highlight the need for specialized approaches. Our iterative AI approach offers a solution by integrating static and dynamic software engineering tools, a specialized knowledge base, and a targeted feedback loop to guide the LLM toward more accurate codemods. While this method outperforms vanilla LLMs, it can still benefit from their continued advances in code generation. We invite you to try Codemod AI yourself and share your feedback.